Reasoning AI: The Illusion of Thought - A Complete 2025 Analysis of Hype and Reality

OpenAI o3 reaches 88% on ARC-AGI, but Apple argues that reasoning AI is an illusion. An in-depth analysis of reasoning models: real breakthroughs or sophisticated pattern matching? All the data, costs, and limitations.


2025 witnessed the greatest paradox in artificial intelligence: while OpenAI o3 achieved record-breaking results (88% on ARC-AGI), Apple published a devastating study defining reasoning models as “an illusion of thought.” This contradiction reveals an uncomfortable truth about AI’s future.

The Great Reasoning AI Contradiction

Imagine reading two headlines on the same day: “OpenAI o3 revolutionizes AI with superhuman reasoning” and “Apple proves reasoning AI is a complete illusion.” Welcome to the 2025 paradox.

On one side, we have unprecedented technological breakthroughs. On the other, rigorous scientific studies dismantling the theoretical foundations of these advances. Reality, as often happens in technology, is more complex than sensationalist headlines suggest.

The Explosion of Test-Time Compute Scaling

OpenAI o3: The $10,000 per Task Revolution

Test-time compute scaling rewrote the rules of the game in 2025. In its high-compute configuration, OpenAI o3 used roughly 170 times more inference compute than its efficient (low-compute) configuration, reaching 88% on ARC-AGI versus 76% for the low-compute version.
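OpenAI has not published exactly how o3 spends its extra inference compute. One well-documented way to turn more test-time compute into accuracy is self-consistency: sample several independent reasoning chains and take a majority vote over their answers. The sketch below assumes a simplified binary setting in which each chain is independently correct with probability p, and computes the exact accuracy of the vote. It illustrates the general idea only; it is not o3's method.

```python
from math import comb


def majority_vote_accuracy(p: float, k: int) -> float:
    """Probability that a strict majority of k independent samples is correct.

    Simplifying assumptions: each sample is correct with probability p,
    samples are independent, and k is odd so the vote cannot tie.
    """
    if k % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    needed = k // 2 + 1  # smallest strict majority
    return sum(comb(k, i) * p**i * (1 - p) ** (k - i) for i in range(needed, k + 1))


for k in (1, 5, 25, 101):
    print(f"k={k:>3}  p=0.6 -> {majority_vote_accuracy(0.6, k):.3f}"
          f"   p=0.4 -> {majority_vote_accuracy(0.4, k):.3f}")
```

With p = 0.6 the vote's accuracy climbs toward 1 as k grows; with p = 0.4 it falls toward 0. Extra samples amplify whatever the single-chain tendency already is, which is one way to see why more compute helps on tasks a model can sometimes solve, but not on tasks beyond its reach.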

The cost? Over $10,000 per complex task. To put this in perspective, François Chollet (creator of ARC-AGI) estimated that a human could be paid about $5 to solve the same task. The results, however, are undeniable: o3 achieved 69.1% accuracy on SWE-Bench Verified, a substantial improvement over o1’s 48.9%.

DeepSeek Democratization: Same Results, Reduced Costs

DeepSeek-R1 triggered panic in financial markets when it showed that performance comparable to OpenAI o1 could be achieved for a reported training cost of roughly $6 million (a figure DeepSeek gave for the final training run of its V3 base model, not for total development). The result? Nvidia stock fell 17% in a single session.

This open-source model showed that reasoning AI innovation doesn’t necessarily require billion-dollar investments. DeepSeek-R1-Zero naturally developed advanced reasoning behaviors through pure reinforcement learning, without initial supervised fine-tuning.

Apple Demolishes the Myth: “The Illusion of Thinking”

The Revolutionary Study of June 7, 2025

The Apple team, including Parshin Shojaee and Samy Bengio, published “The Illusion of Thinking” using a new methodology. Instead of relying on traditional benchmarks (vulnerable to data contamination), they built controllable puzzle environments (Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World) whose complexity can be raised step by step while the underlying logic stays the same.
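Tower of Hanoi shows why such puzzles make a clean testbed: complexity is set by a single knob (the number of discs n), the optimal solution is exactly 2^n - 1 moves long, and any proposed answer can be checked mechanically. The following is a minimal sketch of such an environment; the function names are hypothetical and this is not Apple's evaluation code.

```python
def solve_hanoi(n, src=0, aux=1, dst=2):
    """Return the optimal move list for n discs as (from_peg, to_peg) pairs."""
    if n == 0:
        return []
    return (solve_hanoi(n - 1, src, dst, aux)     # park n-1 discs on the spare peg
            + [(src, dst)]                        # move the largest disc
            + solve_hanoi(n - 1, aux, src, dst))  # stack the n-1 discs back on top


def is_valid_solution(n, moves):
    """Simulate the moves, enforcing the rules, then check the goal state."""
    pegs = [list(range(n, 0, -1)), [], []]  # peg 0 holds discs n..1, largest at the bottom
    for src, dst in moves:
        if not (0 <= src < 3 and 0 <= dst < 3) or src == dst:
            return False  # malformed move
        if not pegs[src]:
            return False  # moving from an empty peg
        disc = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disc:
            return False  # larger disc placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n, 0, -1))  # every disc ends on peg 2, in order


for n in (3, 8, 10):
    moves = solve_hanoi(n)
    print(n, "discs:", len(moves), "moves, valid =", is_valid_solution(n, moves))
```

Because the checker simulates every move, a model's answer can be scored exactly at any difficulty level, which is what makes this kind of environment less vulnerable to the contamination problems of static benchmarks.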

The results were devastating for the reasoning AI industry.

Systematic Failures Discovered by Apple

Collapse beyond complexity thresholds: Models show a “complete accuracy collapse” when problems exceed certain difficulty thresholds. Paradoxically, instead of intensifying their effort, models use fewer reasoning tokens as problems approach this threshold and give shorter answers.

Failure in explicit algorithm utilization: Even when provided with the solution algorithm for Tower of Hanoi, performance didn’t improve. This suggests fundamental limitations in maintaining coherent reasoning chains.

The Overthinking phenomenon: On simple problems, non-reasoning models often outperformed their reasoning counterparts, which tended to “overthink”: they frequently found the correct solution early, then kept exploring wrong alternatives and lost it.

The Counteroffensive: “The Illusion of the Illusion”

The Scientific Community’s Response

Alex Lawsen from Open Philanthropy published a detailed refutation titled “The Illusion of the Illusion of Thinking,” arguing that many of Apple’s striking results derive from experimental flaws.

The main criticism? Apple allegedly ignored output token limits when interpreting its results. At the point where Apple reported models “collapsing” on Tower of Hanoi puzzles with 8 or more discs, models like Claude were already running into their maximum output length.
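The core of the token-budget argument is arithmetic: a complete Tower of Hanoi answer grows exponentially with the number of discs, so any fixed output limit caps how many discs a model can even write out. The sketch below assumes a hypothetical cost of 10 output tokens per move and a 64,000-token output budget; both numbers are illustrative, not measurements of any specific model.

```python
def tokens_needed(n_discs: int, tokens_per_move: int = 10) -> int:
    """Output tokens required to list every move of an optimal n-disc solution."""
    return (2**n_discs - 1) * tokens_per_move


def max_discs_within_budget(budget: int, tokens_per_move: int = 10) -> int:
    """Largest disc count whose full move list still fits in the output budget."""
    n = 0
    while tokens_needed(n + 1, tokens_per_move) <= budget:
        n += 1
    return n


for n in (5, 8, 10, 12, 15):
    print(f"{n:>2} discs -> {tokens_needed(n):>7,} tokens")
print("max discs for a 64k budget:", max_discs_within_budget(64_000))
```

Each extra disc roughly doubles the required output, so a model that stops listing moves may be hitting a budget rather than failing to reason. A higher real per-move cost, or reasoning text written alongside the moves, would lower the cap further.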

Stanford’s Contradictory Evidence

The Stanford AI Index 2025 presents a complex reality: reasoning models have improved dramatically (o1 scored 74.4% on an International Mathematical Olympiad qualifying exam, compared to GPT-4o’s 9.3%), yet o1 is nearly six times more expensive and 30 times slower than GPT-4o.

However, an emerging trend shows performance convergence: the gap between the top-ranked and tenth-ranked models on the Chatbot Arena leaderboard narrowed from 11.9% to 5.4% in one year, suggesting we’re reaching a plateau in fundamental capabilities.

Meta-Analysis: What Actually Works

Meta’s Counterintuitive Study

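Meta research revealed that, for a given question, answers reached through the shortest sampled reasoning chains were up to 34.5% more accurate than those from the longest chains. The finding challenges industry assumptions, and favoring shorter chains could cut computational costs by up to 40%.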

This counterintuitive result suggests that “more reasoning” doesn’t always mean “better reasoning”, a discovery that could revolutionize the approach to reasoning AI.
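The idea can be sketched as a selection rule: sample several reasoning chains for the same question, keep only the m shortest, and majority-vote over their final answers. The function below is a simplified, offline version that assumes all chains have already been generated; in the study's setting the savings come from halting generation once the first chains finish, which this sketch does not model. The sample data is invented for illustration.

```python
from collections import Counter


def shortest_m_vote(chains, m):
    """Majority vote over the final answers of the m shortest chains.

    chains: list of (num_reasoning_tokens, final_answer) tuples.
    Vote ties are broken in favour of the answer from the shortest chain.
    """
    shortest = sorted(chains, key=lambda c: c[0])[:m]
    answers = [answer for _, answer in shortest]  # ordered from shortest chain up
    counts = Counter(answers)
    best = max(counts.values())
    return next(a for a in answers if counts[a] == best)


# Hypothetical samples for one question: (tokens used, final answer)
samples = [(1200, "42"), (950, "42"), (4100, "17"), (3900, "17"), (5200, "17"), (1100, "41")]
print(shortest_m_vote(samples, m=3))             # -> 42 (vote among the 3 shortest chains)
print(shortest_m_vote(samples, m=len(samples)))  # -> 17 (plain majority over all chains)
```

The two calls disagree on purpose: when long chains tend to wander toward a wrong answer, restricting the vote to the shortest chains can change the outcome while also using fewer tokens.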

Stanford’s Meta-CoT: The Future of Reasoning

Researchers from SynthLabs and Stanford proposed Meta Chain-of-Thought (Meta-CoT), a framework that explicitly models the latent reasoning process required to solve complex problems. Unlike traditional CoT, Meta-CoT takes a structured approach inspired by dual-process theory from cognitive science.

Unprogrammed Emergent Behaviors

DeepSeek’s Autonomous Learning

DeepSeek-R1-Zero represents a fascinating case study: it developed powerful reasoning behaviors through pure reinforcement learning, without initial supervised fine-tuning. However, it also showed challenges like poor readability and language mixing.

DeepSeek’s hypothesis is bold: “Can we simply reward the model for correctness and let it autonomously discover the best way to think?”
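DeepSeek's R1 report describes rule-based rewards (an accuracy reward for a correct final answer and a format reward for placing the reasoning between <think> and </think> tags) combined with Group Relative Policy Optimization (GRPO), which scores each sampled answer against the other samples drawn for the same prompt. The sketch below covers only that reward-and-advantage step, under simplifying assumptions: the answer is assumed to follow the closing </think> tag, the reward weights are arbitrary, and the policy-gradient update itself is omitted.

```python
import re
from statistics import mean, pstdev

# Assumed completion format: "<think> ...reasoning... </think> ANSWER"
THINK_PATTERN = re.compile(r"^<think>.*?</think>\s*(.+)$", re.DOTALL)


def reward(completion: str, reference: str) -> float:
    """Rule-based reward: 1.0 for a correct answer plus 0.1 for the expected format.

    The 1.0 / 0.1 weights are illustrative assumptions, not DeepSeek's values.
    """
    match = THINK_PATTERN.match(completion.strip())
    if match is None:
        return 0.0  # no format bonus, and no answer we can reliably extract
    answer = match.group(1).strip()
    return (1.0 if answer == reference else 0.0) + 0.1


def group_relative_advantages(rewards):
    """GRPO-style advantage: each reward standardized against its own group.

    Uses the population standard deviation, a choice made for this sketch.
    """
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:
        return [0.0 for _ in rewards]  # all samples scored equally: no learning signal
    return [(r - mu) / sigma for r in rewards]


group = [
    "<think>6 * 7 = 42</think> 42",
    "<think>6 + 7 = 13</think> 13",
    "42",                               # right answer, but missing the think tags
    "<think>7 * 6 is 42</think> 42",
]
rewards = [reward(c, "42") for c in group]
print(rewards)
print(group_relative_advantages(rewards))
```

No step of the reasoning is graded directly: only the outcome and the format are rewarded, and the group comparison pushes the policy toward whichever chains happened to score above their siblings. That is the sense in which the model is left to "discover the best way to think" on its own.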

Emergent Capabilities in Scientific Domains

A comparison on scientific computing tasks revealed that all three reasoning models (DeepSeek R1, ChatGPT o3-mini-high, and Claude 3.7 Sonnet) recognized that an ODE system was stiff and chose an implicit method, while all the non-reasoning models failed to do so.

This suggests genuine emergent capabilities in specific domains, even though general limitations remain.
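Stiffness is easy to demonstrate. For the test equation y' = -λy with large λ, explicit (forward) Euler is stable only when the step size h satisfies h·λ ≤ 2, while implicit (backward) Euler is stable for any h > 0. The sketch below uses λ = 1000 and h = 0.01, values chosen for illustration rather than taken from the comparison cited above.

```python
import math


def explicit_euler(lam: float, y0: float, h: float, steps: int) -> float:
    """Forward Euler for y' = -lam * y, i.e. y_{n+1} = (1 - h*lam) * y_n."""
    y = y0
    for _ in range(steps):
        y = y + h * (-lam * y)
    return y


def implicit_euler(lam: float, y0: float, h: float, steps: int) -> float:
    """Backward Euler for y' = -lam * y: solve y_{n+1} = y_n - h*lam*y_{n+1}."""
    y = y0
    for _ in range(steps):
        y = y / (1 + h * lam)  # closed-form solve of the linear implicit equation
    return y


lam, h, steps = 1000.0, 0.01, 20  # h*lam = 10, far beyond forward Euler's limit of 2
print("exact   :", math.exp(-lam * h * steps))
print("explicit:", explicit_euler(lam, 1.0, h, steps))
print("implicit:", implicit_euler(lam, 1.0, h, steps))
```

Forward Euler multiplies the solution by -9 at every step and explodes, while backward Euler divides it by 11 and decays toward the true solution, which is essentially zero. For nonlinear stiff systems the implicit step requires a Newton solve, which is why production code typically relies on a library integrator such as SciPy's `solve_ivp` with `method="BDF"` or `method="Radau"`.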

The Cost of Complexity and Scalability

Unsustainable Economic Trade-offs

The test-time compute scaling paradigm presents drastic trade-offs. With o3 costing $17-20 per task in low-compute configuration, while a human expert would cost about $5, fundamental questions arise about the economic sustainability of these approaches.
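The trade-off is easier to see as a ratio. The sketch below uses only the figures quoted in this article ($17-20 per task for o3's low-compute configuration, over $10,000 per task for the high-compute one, and about $5 for a human) and treats them as point estimates; it ignores accuracy differences, latency, and the cost of human review.

```python
HUMAN_COST_PER_TASK = 5.0  # USD, the human estimate attributed to François Chollet above

# Per-task costs quoted in this article (USD); the high-compute figure is a lower bound
configs = {
    "o3 low-compute (low end)": 17.0,
    "o3 low-compute (high end)": 20.0,
    "o3 high-compute (lower bound)": 10_000.0,
}


def cost_multiple(model_cost: float, human_cost: float = HUMAN_COST_PER_TASK) -> float:
    """How many times more the model costs than a human for one task."""
    return model_cost / human_cost


for name, cost in configs.items():
    print(f"{name:<32} ${cost:>9,.2f}  -> {cost_multiple(cost):,.1f}x human cost")
```

Even the cheap configuration costs 3.4 to 4 times as much as a human per task, and the high-compute one at least 2,000 times as much. On these figures the model wins economically only where it is much faster, runs at a scale people cannot, or solves tasks people cannot.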

The Innovation Plateau

An Epoch AI analysis suggests that the AI industry may not be able to obtain massive performance gains from reasoning models for much longer, with progress potentially slowing within a year.

Methodological Criticisms: The Benchmark Problem

Data Contamination

Vanessa Parli from Stanford HAI poses critical questions: “Are we measuring the right thing? Are these benchmarks compromised?” The risk of overfitting to benchmarks is real: models might be trained to pass specific tests rather than develop generalizable capabilities.
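One common, if crude, way to probe for contamination is to measure how many of a benchmark item's word n-grams also appear in training text. The sketch below assumes lowercase whitespace tokenization, an 8-gram window, and a tiny in-memory corpus; real audits run over tokenized corpora at far larger scale with indexed lookups, and a high overlap score is a signal to investigate, not proof of contamination.

```python
def ngrams(text: str, n: int) -> set:
    """Set of word n-grams after lowercasing and whitespace tokenization."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(item: str, corpus_docs: list, n: int = 8) -> float:
    """Fraction of the item's n-grams that occur anywhere in the corpus."""
    item_grams = ngrams(item, n)
    if not item_grams:
        return 0.0  # item shorter than n words: nothing to compare
    corpus_grams = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc, n)
    return len(item_grams & corpus_grams) / len(item_grams)


# Hypothetical benchmark item and training documents
benchmark_item = "a train leaves the station at 3 pm traveling at 60 miles per hour toward the city"
corpus = [
    "practice problem: a train leaves the station at 3 pm traveling at 60 miles per hour toward the city",
    "an unrelated article about weather patterns in northern europe",
]
print(f"{overlap_ratio(benchmark_item, corpus):.2f}")  # prints 1.00: the item appears verbatim
```

A check like this only catches near-verbatim leakage; paraphrased or translated copies of a benchmark slip through, which is part of why the benchmark problem is hard to settle.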

Mechanism Opacity

The mechanisms through which reasoning models choose their paths remain surprisingly opaque. Apple’s researchers concluded that “LRMs have limitations in exact computation: they fail to use explicit algorithms and reason inconsistently across puzzles.”

Practical Applications: Where Reasoning AI Works

Documented Industry Successes

Despite theoretical limitations, reasoning models are creating real value in specific applications:

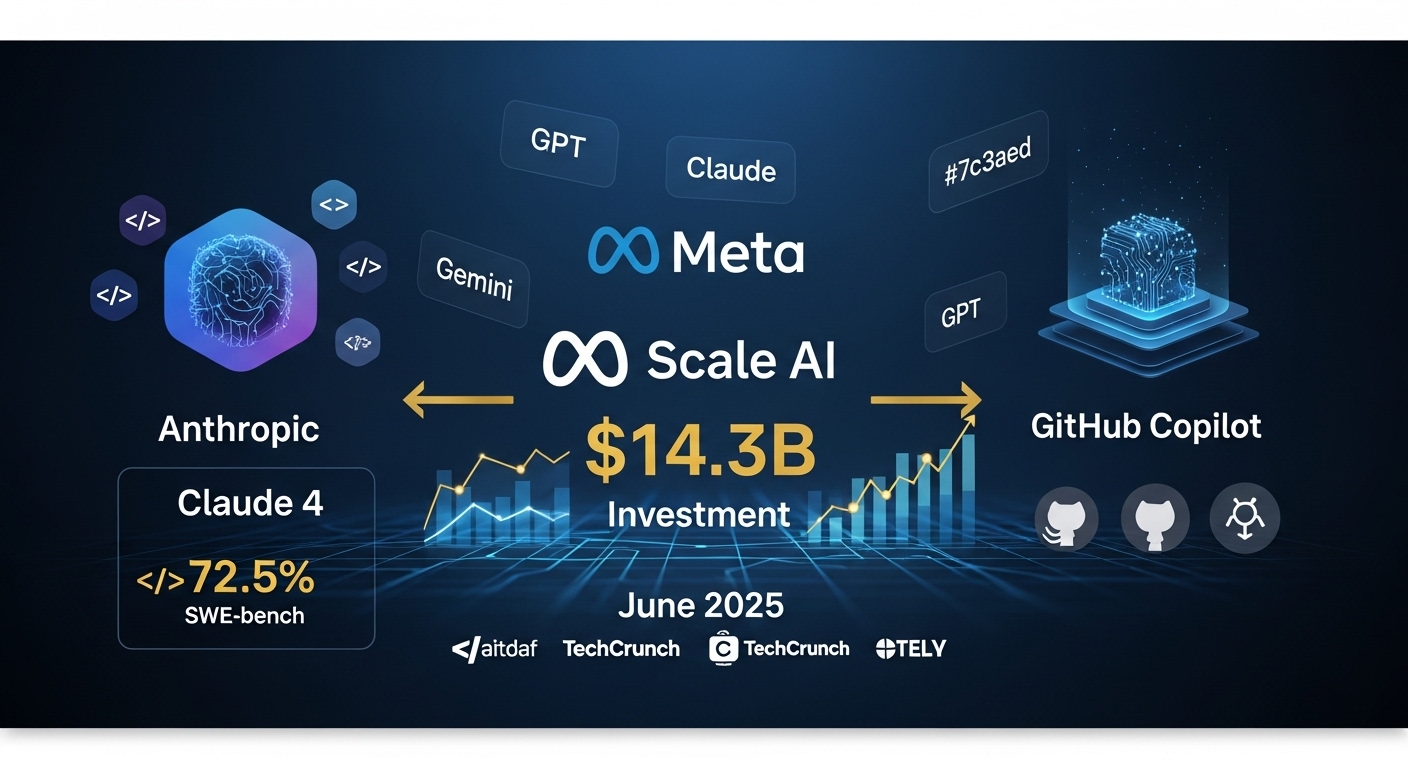

Software Development: Claude Opus 4 reaches 72.5% on SWE-bench Verified, with developers reporting productivity increases of 88%.

Financial Analysis: Reasoning models show superior performance in complex financial document analysis and multi-scenario projections.

Scientific Research: Emergent capabilities in specific domains like computational physics and dynamic systems analysis.

Implications for US Businesses

When to Invest in Reasoning AI

Recommended Investments:

- Coding assistants for software development (immediate ROI)

- Complex document analysis (legal/financial sector)

- Research and development with domain-specific reasoning

Cautious Approaches:

- Mission-critical systems requiring reliable reasoning

- Applications with limited computational budget

- Scenarios where interpretability is fundamental

Gradual Adoption Strategy

- Pilot Phase (3-6 months): Testing on specific use cases with measurable ROI

- Validation Phase (6-12 months): Controlled expansion with performance monitoring

- Scale Phase (12+ months): Enterprise implementation only after complete validation

Three Simultaneous Truths of Reasoning AI

The Hybrid Reality of 2025

Reasoning AI presents a complex and contradictory reality requiring nuanced understanding:

1. Breakthroughs are real: Reasoning models genuinely surpass their predecessors on specific tasks, often by dramatic margins. Claude 4 and o3 demonstrate concrete capabilities in coding and problem-solving.

2. Limitations are systemic: Beyond certain complexity thresholds, these systems show predictable failure patterns that suggest deep architectural limitations, as the Apple study showed.

3. Commercial value exists: Despite limits, these models are already creating significant value in specific applications where their strengths align with task requirements.

The Future of Reasoning AI: 2026 Predictions

Emerging Trends

Hybrid Reasoning Systems: Combination of symbolic and neural approaches to overcome current limitations.

Domain-Specific Reasoning: Specialization in specific sectors rather than general reasoning.

Efficient Reasoning: Focus on architectures maintaining high performance while reducing computational costs.

Challenges to Address

Interpretability: Need for more transparent reasoning systems for critical applications.

Robustness: Development of models maintaining consistent performance across different problem types.

Sustainability: Reduction of computational costs to make reasoning AI economically viable.

Conclusions: Navigating Between Hype and Reality

The 2025 reasoning AI debate reflects a broader tension in the tech industry between revolutionary promises and scientific reality. Truth, as often happens, lies in the middle: reasoning AI represents significant progress but not a universal solution.

Key Lessons for Business Leaders

Pragmatic Approach: Evaluate reasoning AI for specific use cases with measurable ROI, not as a general solution.

Gradual Investment: Start with controlled pilots before significant financial commitments.

Continuous Monitoring: The limitations identified in Apple’s study call for ongoing vigilance in deployed systems.

The Path to True Reasoning

2025 demonstrated that in AI, as in many scientific fields, the most interesting questions often emerge not when we reach new heights, but when we begin to truly understand the limits of what we’ve built.

Reasoning AI is neither the panacea promised by hype nor the complete illusion suggested by critics. It’s a powerful technology with specific limitations that, when applied to the right problems with realistic expectations, can generate significant value.

The future belongs to those who can navigate this complexity, implementing reasoning AI where it adds real value while avoiding the over-hype and under-delivery traps that have characterized too many technological innovations of the past.

Interested in implementing AI reasoning in your business? Contact the 42ROWS team for strategic consulting on how to integrate the latest AI technologies into your data automation processes, balancing innovation and pragmatism.


About the Author

42ROWS Team

The 42ROWS team analyzes the frontiers of artificial intelligence to provide concrete insights on emerging technologies and their impact on enterprise applications.